How to Compare AI Search Optimization Tools

Let’s explore how businesses can evaluate AI search optimization tools based on real visibility, not demo dashboards.

Brief

In my hands-on experience with AI search optimization, I noticed something almost immediately: not all tools are created equal. Many companies rush into “AI SEO” assuming it’s just a natural extension of traditional SEO. It isn’t.

AI-driven search behaves differently, surfaces information differently, and rewards content in ways classic ranking models never did. If you want real visibility in AI-powered environments, features alone won’t get you there. You need a strategy grounded in how these tools actually perform in practice.

Over the past year, I’ve tested multiple AI search optimization platforms—some focused on AI mentions, others on conversational prompts, and a few trying to do everything at once. Based on that experience, this is a practical, no-fluff guide to comparing AI search optimization tools the right way.

What Is AI Search Optimization?

AI search optimization—often referred to as Generative Engine Optimization (GEO)—is about improving visibility within AI-driven search environments, not just traditional search engines.

Where Google SEO is built around keywords, rankings, backlinks, and traffic, AI search platforms work differently. They generate answers, not result lists. Your content isn’t ranked; it’s selected as a source.

In simple terms, AI search optimization ensures your brand appears inside the answers users receive from AI assistants, chatbots, and large language model platforms. Visibility here is about credibility, relevance, and contextual alignment, not position-one rankings.

Traditional SEO vs. AI Search Tools

When I first started working in this space, I assumed many traditional SEO metrics would carry over. That assumption doesn’t hold up.

Here’s the real difference:

SEO tools measure backlinks, keyword positions, SERP visibility, and organic traffic.

AI search tools track brand mentions, source citations, prompt performance, and inclusion in AI-generated answers.

A tool that excels at traditional SEO can still be nearly useless for AI search visibility. Understanding this distinction early saves a lot of wasted evaluation time.

Core Criteria to Compare AI Search Optimization Tools

Through testing, I’ve learned to evaluate tools across a few non-negotiable dimensions. These are the factors that actually impact strategy and visibility—not just how impressive the dashboard looks.

1. Coverage Across AI Engines

The first thing I check is which AI engines a tool actually monitors.

Some platforms only track ChatGPT or Bing AI. Others extend coverage to Gemini, Claude, Perplexity, and emerging conversational engines.

Why this matters:

Visibility in one AI engine does not guarantee visibility in another. Each model pulls from different sources and applies different weighting logic. A tool that only shows part of the landscape gives you a distorted view of reality.

Comprehensive engine coverage isn’t a nice-to-have—it’s foundational.

2. Visibility Metrics That Actually Matter

Metrics tell a story, but only if they’re the right ones. I focus on:

Mentions: How often your brand or content appears in AI responses.

Share of Voice: Visibility relative to competitors.

Citation Quality: Whether AI models reference authoritative, accurate sources.

Sentiment & Context: Whether your brand appears in the right framing and use case.

Tools that report raw mention counts without context aren’t useful for decision-making. Visibility without quality can do more harm than good.
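To make Mentions and Share of Voice concrete, here is a minimal sketch of how they could be computed from a sample of AI responses you have collected yourself. The brand names, the sample responses, and the `share_of_voice` helper are all hypothetical, and the matching is a naive case-insensitive substring check. Real tools likely use more careful entity matching.

```python
from collections import Counter


def share_of_voice(responses, brands):
    """Return each brand's share of all brand mentions across the responses.

    Assumption: a brand counts at most once per response (presence, not
    frequency), and matching is a plain case-insensitive substring test.
    """
    counts = Counter()
    for text in responses:
        lowered = text.lower()
        for brand in brands:
            if brand.lower() in lowered:
                counts[brand] += 1
    total = sum(counts.values())
    # Avoid division by zero when no brand appears in any response.
    return {b: (counts[b] / total if total else 0.0) for b in brands}


# Hypothetical sample of AI-generated answers to the same prompt set.
responses = [
    "For this use case, Acme and Globex are both solid options.",
    "Most teams start with Globex.",
    "Acme is often recommended for small teams.",
    "Initech is a newer alternative.",
]

sov = share_of_voice(responses, ["Acme", "Globex", "Initech"])
for brand, share in sov.items():
    print(f"{brand}: {share:.0%}")
```

A number like this says nothing about citation quality or framing, which is why it only makes sense alongside the context metrics above.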

3. Competitive Intelligence Depth

This is where the gap between tools becomes obvious.

Strong AI search platforms allow you to:

Compare AI visibility side-by-side with competitors

See which prompts competitors appear for

Identify content and authority gaps you can realistically close

This isn’t about vanity metrics. It’s about understanding why competitors are being referenced—and what you can do about it.

4. Prompt & Intent Analysis

Prompt intelligence is non-negotiable.

AI search is conversational by nature. If a tool doesn’t help you understand:

What users are actually asking

How intent shifts across phrasing

Which prompts trigger inclusion or exclusion

…then optimization becomes guesswork.

I’ve personally lost weeks early on by underestimating this. Once you see how much visibility depends on phrasing and intent, prompt analysis becomes the backbone of strategy.
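One simple way to see how phrasing affects inclusion is to group collected responses by prompt and measure how often your brand shows up for each one. The sketch below assumes you already have (prompt, response) pairs from your own testing. The prompts, responses, brand name, and the `inclusion_by_prompt` helper are illustrative assumptions, not output from any specific tool.

```python
from collections import defaultdict


def inclusion_by_prompt(observations, brand):
    """Return the fraction of responses per prompt that mention the brand.

    observations: iterable of (prompt, response_text) pairs.
    Assumption: a case-insensitive substring match counts as inclusion.
    """
    hits = defaultdict(int)
    runs = defaultdict(int)
    needle = brand.lower()
    for prompt, response in observations:
        runs[prompt] += 1
        if needle in response.lower():
            hits[prompt] += 1
    return {prompt: hits[prompt] / runs[prompt] for prompt in runs}


# Hypothetical test runs: two phrasings of a similar intent.
observations = [
    ("best crm for startups", "Acme and Globex are popular."),
    ("best crm for startups", "Globex is a common pick."),
    ("affordable crm for small teams", "Acme is often suggested."),
    ("affordable crm for small teams", "Consider Acme or Initech."),
]

rates = inclusion_by_prompt(observations, "Acme")
for prompt, rate in rates.items():
    print(f"{prompt!r}: included in {rate:.0%} of runs")
```

With only a handful of runs per prompt the rates are noisy, so treat gaps between phrasings as leads to investigate rather than conclusions.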

5. Integration and Workflow Fit

Even the most advanced tool fails if it lives in isolation.

The platforms that last are the ones that integrate cleanly with:

Content workflows

Analytics systems

Reporting tools like Looker Studio or internal dashboards

I also look closely at team usability. If content, SEO, or product teams can’t intuitively act on insights, the tool won’t survive past the pilot phase.

6. Cost vs. Strategic Value

Pricing matters, but value matters more.

When evaluating cost, I look at:

Pricing transparency

Feature access by plan

Export and reporting rights

Onboarding and support quality

Some tools are expensive and worth it. Others are cheaper but end up costing more through manual work and poor insights. The real question is whether the tool saves time and sharpens strategy.

Common Mistakes I See Teams Make

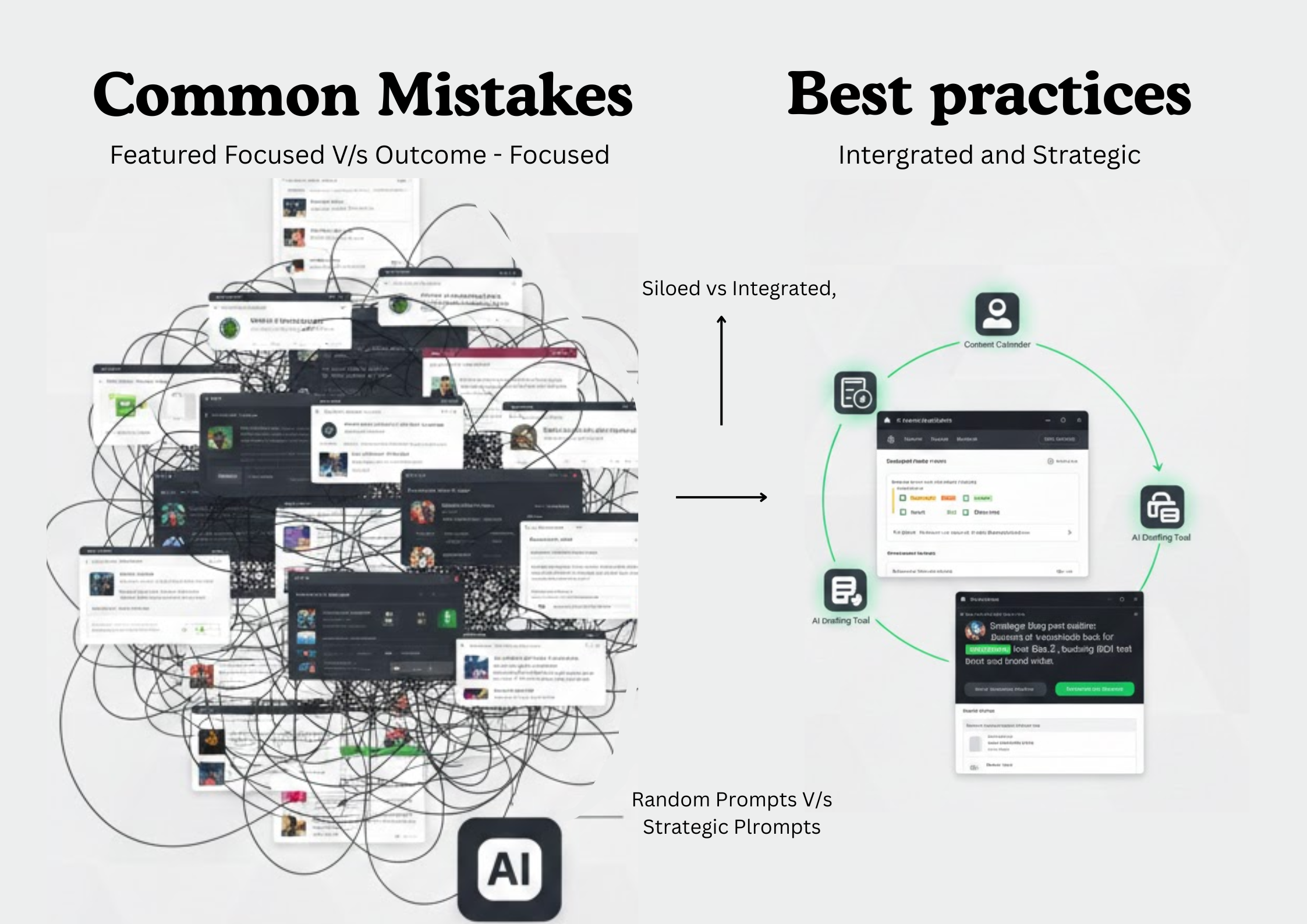

Across companies, the same patterns show up repeatedly:

Prioritizing features over outcomes: Content generation doesn’t equal visibility.

Ignoring integration complexity: Siloed tools slow teams down.

Skipping strategic alignment: Tools should support strategy, not define it.

Underestimating prompt intelligence: Intent drives AI inclusion more than keywords ever did.

Simply avoiding these mistakes puts teams ahead of most early adopters.

How We Approach Tool Comparison at Nevrio Technology

At Nevrio Technology, we treat AI search tools as strategic infrastructure, not shiny add-ons.

Our evaluation process is hands-on and practical:

Define the visibility goal first (support, discovery, authority)

Identify the AI engines that matter to the audience

Benchmark competitors and prompt coverage

Test tools using real content, not demos

Score usability and workflow impact

Coverage and visibility intelligence always outweigh cosmetic features. Dashboards don’t move strategy—insights do.
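If you want to turn the six criteria into a comparable number, a weighted scorecard is one option. The sketch below is only an illustration: the weights, the 1 to 5 scale, and the two example tools are assumptions made up for this example, with coverage and visibility metrics weighted highest to reflect the priority described above. Adjust the weights to your own goals.

```python
# Hypothetical weights for the six criteria; they must sum to 1.0.
WEIGHTS = {
    "engine_coverage": 0.25,
    "visibility_metrics": 0.25,
    "competitive_intel": 0.15,
    "prompt_analysis": 0.15,
    "workflow_fit": 0.10,
    "cost_value": 0.10,
}


def weighted_score(scores, weights=WEIGHTS):
    """Combine per-criterion scores (assumed 1-5 scale) into one number."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[name] * scores[name] for name in weights)


# Made-up scores from a hands-on trial of two unnamed tools.
tool_a = {
    "engine_coverage": 5, "visibility_metrics": 4, "competitive_intel": 3,
    "prompt_analysis": 4, "workflow_fit": 2, "cost_value": 3,
}
tool_b = {
    "engine_coverage": 3, "visibility_metrics": 3, "competitive_intel": 5,
    "prompt_analysis": 2, "workflow_fit": 5, "cost_value": 5,
}

score_a = weighted_score(tool_a)
score_b = weighted_score(tool_b)
print(f"Tool A: {score_a:.2f}  Tool B: {score_b:.2f}")
```

The score is a tiebreaker for discussion, not a verdict. A tool that fails on engine coverage for your audience should probably be ruled out regardless of its total.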

Insider Tips from Real Testing

A few lessons learned the hard way:

Always test tools using your own content

Prioritize context over raw metrics

Check how often data is refreshed—AI changes fast

Evaluate how clearly competitor insights are surfaced

Favor tools that make insights easy to act on

These aren’t theory-based tips. They come from mistakes most teams only make once.

Final Thoughts

Comparing AI search optimization tools isn’t about chasing features or trends. It’s about understanding where your content appears, why it appears, and how to improve that visibility intentionally.

The right tool helps you see:

How AI systems interpret your authority

Where competitors are outperforming you

Which prompts and intents actually matter

At Nevrio Technology, we believe AI search optimization should be grounded in clarity, not hype. The tools you choose should support long-term visibility, not just impressive demos.

If you’re evaluating platforms right now, approach it like a strategist, not a shopper. The difference shows up fast.